ASUS AI Pod feat. NVIDIA GB200 NVL72


In a rapidly evolving world of extreme AI and HPC requirements, the ASUS AI Pod is not just a game-changing hardware solution – it’s a complete ecosystem.

The system’s innovative architecture combines cutting-edge hardware with a comprehensive software stack to deliver a scalable, robust, and highly efficient platform for research, development, and production environments. It features:

- Up to 72 Blackwell GPUs

- Up to 36 Grace CPUs

- NVLink networking technology

- Advanced Liquid Cooling

- Scale-up Rack Form Factor

Ready for a quote?

📞 Give us a call at (888) 828-7646 (POGO)

💻 Schedule a meeting with our sales team

📧 Drop us an email at sales@pogolinux.com

The best choice for AI workloads, from edge to data center

ASUS AI Pods are built with cutting-edge NVIDIA GPUs and networking hardware, ensuring top-tier performance in standardized software environments.

- Reduce cost by up to 50%: Integrate network, virtualization, and storage services.

- Better performance: Benefit from high-speed processing and reduced latency, enabling faster

- Seamless migration: Ensure a smooth transition from legacy hypervisors such as VMware or Hyper-V.

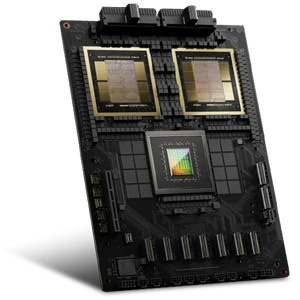

NVIDIA Blackwell GPUs

AI Servers

The core computing units of the AI Pod solution are NVIDIA Blackwell GPUs in the rack-scale GB200 NVL72 configuration. Each flagship Blackwell-architecture GPU contains 208 billion transistors and is manufactured on the TSMC 4NP process. This revolutionary AI platform is built to tackle large-scale AI applications with unmatched processing power and energy efficiency.

NVIDIA Grace CPUs

AI Servers

AI servers form the foundation of the high-density compute nodes. These servers are specially designed to house multiple GB200 Grace Blackwell Superchips, each pairing one NVIDIA Grace CPU with two Blackwell GPUs, and are optimized for compute density.

General-Purpose CPUs

Intel Xeon Servers

In addition to the AI servers, the architecture includes ASUS RS Series rack servers based on Intel Xeon processors. These servers handle management and supporting workloads, such as cluster management and monitoring, enhancing the overall system's versatility.

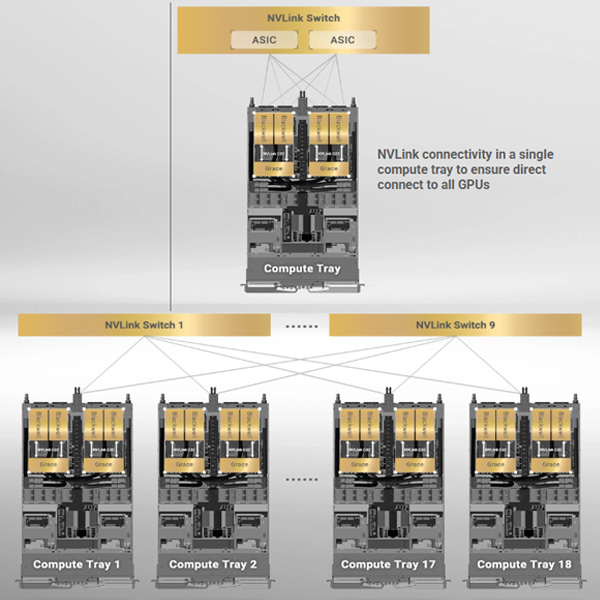

NVIDIA NVLink

High-speed Interconnect

Blackwell GPUs are interconnected via fifth-generation NVLink and NVLink Switch, providing 1.8 TB/s of GPU-to-GPU bandwidth per GPU to deliver extreme AI and HPC performance. Cluster-wide communication is further supported by a robust networking infrastructure, including NVIDIA Quantum-2 InfiniBand and Spectrum-4 Ethernet switches, ensuring low-latency, high-bandwidth communication across the cluster.

NVIDIA-Certified

Artificial Intelligence Solution

The ASUS AI Pod solution has been tested and verified by NVIDIA. With this NVIDIA certification, the ASUS AI Pod server system is validated to meet rigorous performance and reliability standards, particularly for resource-intensive applications such as AI, deep learning, and data analytics.

Integrated AI Solution for Demanding Workloads

How It Works

Designed to meet the most demanding workloads, the ASUS AI Pod integrates cutting-edge hardware, advanced networking, and a comprehensive software stack. Its architecture is built on NVIDIA Grace Blackwell Superchips, which combine Grace CPUs and Blackwell GPUs interconnected via high-speed NVLink.

ASUS-NVIDIA

AI Integration Partner

Pogo Linux has partnered with ASUS to offer the AI Pod, configured specifically for AI-ML applications, deep learning and their accompanying datasets, and data analytics.

- Comprehensive 3-Year Limited Warranty: Every system we ship is backed by our two decades of system design experience.

- Advance Parts Replacement: Our engineering team puts every system through a stringent series of tests to ensure flawless performance and compatibility.

- Direct Access to Expert Support Team: Technological expertise continues after the sale, as we provide a robust three-year warranty accompanied by our first-class support.

Have an upcoming AI project? Let's talk.

Whether your AI-ML projects are in development, in the data-ingest and model-training stage, or producing inference outputs, Pogo Linux has integrated AI solutions, GPU workstations, and data-processing compute servers for any on-premises project.